TimeMachine soooo, yester-year?

Unix bash Backup

The way Apple's Time Machine works is really admirable. It is a fascinatingly simple solution in principle: every incremental backup is a collection of hard links to the files of the last backup. Then only the files that have changed are replaced with an updated copy.
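The principle is easy to try in a shell. The following throw-away demo assumes GNU coreutils (`stat -c`), and the file names are made up. Two names share one inode, and deleting one name does not delete the data. This is also why any single backup folder can be deleted without affecting the others.

```bash
#!/usr/bin/env bash
# Demo of the hard link principle (GNU coreutils `stat` assumed;
# file names are made up for this example).
demo=$(mktemp -d)
echo "hello" > "$demo/original.txt"
ln "$demo/original.txt" "$demo/link.txt"        # a second name, no data copied

# both names report the same inode number and a link count of 2
stat -c 'inode=%i links=%h %n' "$demo/original.txt" "$demo/link.txt"
inode_a=$(stat -c '%i' "$demo/original.txt")
inode_b=$(stat -c '%i' "$demo/link.txt")

rm "$demo/original.txt"                         # remove one name ...
survivor=$(cat "$demo/link.txt")                # ... the data is still there
links_left=$(stat -c '%h' "$demo/link.txt")     # link count is back to 1
echo "after rm: content='$survivor' links=$links_left"

rm -rf "$demo"
```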

elegant?

At first glance this seems a very crude way to make incremental backups: if a large file changes, the whole file is copied again. On the average desktop computer (the intended audience), however, most of the files that change are small.
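What a snapshot really costs on disk is easy to check: GNU du counts each hard-linked inode only once per invocation, so passing all snapshot folders to a single du call charges every file to the first folder that contains it. A throw-away demo, assuming GNU coreutils (folder names made up):

```bash
#!/usr/bin/env bash
# Demo (GNU coreutils assumed): du counts a hard-linked file only once per
# invocation, so listing snapshots together shows what each one adds.
root=$(mktemp -d)
mkdir "$root/snap1"
head -c 1048576 /dev/urandom > "$root/snap1/big.bin"   # 1 MiB, incompressible
cp -al "$root/snap1" "$root/snap2"                     # hard-link "snapshot"

# snap1 is charged for the 1 MiB file, snap2 only for its own directory
du -sk "$root/snap1" "$root/snap2"
mapfile -t kib < <(du -sk "$root/snap1" "$root/snap2" | cut -f1)

rm -rf "$root"
```

On a real backup disk, `du -sh <backup_base>/*` gives the same per-snapshot view; the timestamped folder names expand in chronological order.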

elegant!

The elegant part of this solution is that it does not require specialized software to restore any backup from a Time Machine drive, as long as the disk can be mounted (usually HFS+, possibly encrypted). Every backup presents the user with a complete set of files to browse through. There is no need to stitch together multiple folders of partial backups that have been created over time.
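With hard-link snapshots, restoring is just copying. A minimal sketch, assuming the folder layout that MachineTime.sh creates (`<backup_base>/<timestamp>/<absolute source path>`); the helper name `restore_dir` and the example paths are made up:

```bash
#!/usr/bin/env bash
# restore_dir <snapshot_dir> <source_dir> <target_dir>
# Hypothetical helper: copies the state of <source_dir> stored in
# <snapshot_dir> into <target_dir>. Assumes the snapshot mirrors the
# absolute source path, e.g. <backup_base>/<timestamp>/srv/data/test.
restore_dir() {
    local snapshot="${1%/}" src="${2%/}" target="$3"
    if [ ! -d "${snapshot}${src}" ]; then
        echo "not found in snapshot: ${src}" >&2
        return 1
    fi
    mkdir -p "$target" || return 1
    # plain -a (no -l): restored files are independent copies, so editing
    # them can never change the snapshot; "/." also copies dotfiles
    cp -a "${snapshot}${src}/." "$target/"
}

# usage (example paths):
# restore_dir /mnt/backup/20220101-120000 /srv/data/test /srv/data/test-restored
```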

Can we use it on other systems?

Yes, if the file system supports hard links, this should work. Most *nix file systems, such as ext2/3/4, Btrfs and ZFS, support hard links. Even NTFS has this capability (FAT and its variants, however, do not).
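Rather than guessing from the file system type, you can simply test the target. A small sketch; the helper name `supports_hardlinks` is made up, and GNU coreutils (`mktemp`, `stat -c`) are assumed:

```bash
#!/usr/bin/env bash
# supports_hardlinks <dir>
# Hypothetical helper: returns 0 if hard links can be created in <dir>.
# It simply tries, which is more reliable than guessing from the
# file system type (GNU coreutils mktemp and stat assumed).
supports_hardlinks() {
    local dir="$1" probe ok=1
    probe=$(mktemp "$dir/.hardlink-probe.XXXXXX" 2>/dev/null) || return 1
    if ln "$probe" "$probe.lnk" 2>/dev/null; then
        # both names must resolve to the same inode
        [ "$(stat -c %i "$probe")" = "$(stat -c %i "$probe.lnk")" ] && ok=0
    fi
    rm -f "$probe" "$probe.lnk"
    return $ok
}

# usage, e.g. before pointing backups at a freshly mounted disk:
# supports_hardlinks /mnt/backup || echo "no hard links on /mnt/backup"
```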

but how?

It can be done in two simple steps:

1. copy

Copy all files and folders of the last backup to a new location as hard links:

```bash
cp -al <last_backup> <dst>
```

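The `-a` option (archive) copies recursively and preserves attributes such as permissions and timestamps; `-l` creates hard links instead of copying the file contents. Only the directories are created anew.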
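To convince yourself that no file data was copied, look at the link counts: every regular file in the new tree should have at least two names. A throw-away demo, assuming GNU cp and find (folder names made up):

```bash
#!/usr/bin/env bash
# Demo with throw-away folders (GNU cp and find assumed): after `cp -al`
# every regular file in the new tree is a second name for an existing inode.
base=$(mktemp -d)
mkdir -p "$base/last/docs"
echo "report" > "$base/last/docs/report.txt"
echo "notes" > "$base/last/notes.txt"

cp -al "$base/last" "$base/new"       # directories are created, files linked

# a link count of 1 would mean the file was really copied; expect 0
copied=$(find "$base/new" -type f -links 1 | wc -l)
linked=$(find "$base/new" -type f -links +1 | wc -l)
echo "linked: $linked, copied instead of linked: $copied"

rm -rf "$base"
```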

2. rsync the difference

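Use rsync to copy only the changed files from the source into `<dst>`, replacing their hard links with the new versions; `--delete` removes files from `<dst>` that no longer exist in `<src>`:

```bash
rsync -a --delete <src>/ <dst>/
```

The `-a` option is needed: without it (or at least `-r`) rsync skips directories, and it also preserves the modification times that rsync's default quick check (size and modification time) uses to skip unchanged files. The trailing slash on `<src>/` copies the contents of the folder rather than the folder itself. rsync writes each changed file to a temporary file and renames it into place, which replaces the hard link in `<dst>` and leaves the file in `<last_backup>` untouched. Do not add `--inplace`: it would overwrite the shared file and thereby change the older backup as well.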

That’s basically it, simple!

real world usage

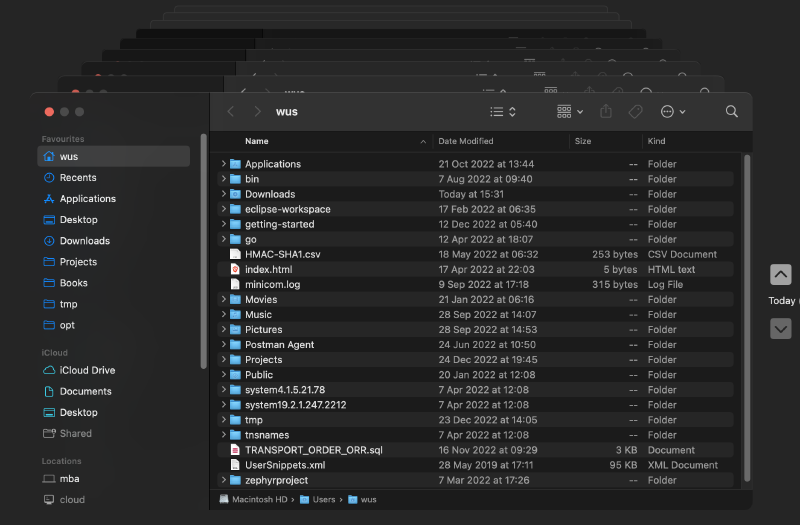

I use this principle to back up all the data on my home NAS to an external disk. The script is called MachineTime.sh:

```bash
#!/usr/bin/env bash
# -*- tab-width: 4; encoding: utf-8; -*-
#
#########
# License:
#
# DO WHAT THE FUCK YOU WANT TO PUBLIC LICENSE
# Version 2, December 2004
#
# Copyright (C) 2022 Simon Wunderlin <wunderlins@gmail.com>
#
# Everyone is permitted to copy and distribute verbatim or modified
# copies of this license document, and changing it is allowed as long
# as the name is changed.
#
# DO WHAT THE FUCK YOU WANT TO PUBLIC LICENSE
# TERMS AND CONDITIONS FOR COPYING, DISTRIBUTION AND MODIFICATION
#
# 0. You just DO WHAT THE FUCK YOU WANT TO.
#
#########
#
## @file
## @author Simon Wunderlin <wunderlins@gmail.com>
## @brief Hardlink Backup Script using rsync
## @copyright WTFPLv2
## @version 1
## @details
##
## @par Synopsis
##
## This script creates incremental backups by hard linking
## all files from the last backup and only replacing changed files.
##
## This is achieved by using the standard `cp -al <last_backup> <dst>` command
## (`-al` makes a copy of hard links) and `rsync -a --delete <src> <dst>`
## to replace changed files.
##
## @par Usage
##
## ```bash
## backup.sh [-f] <backup_base> <src_dir_1> [<src_dir_2> [<src_dir_3> [...]]]
## backup.sh [-f] -c <config.ini>
## ```
##
## - `-f` (optional) @n
##   Full backup, otherwise incremental
## - `-c` <config.ini> @n
##   Take all parameters from this config file
## - `backup_base` @n
##   base directory of backups
## - `src_dir_N` @n
##   folder to back up
##
## @par config.ini
##
## Example configuration file
##
## ```ini
## [main]
## backup_root = /srv/data/bkp
## src_dir = /srv/data/test
## src_dir = /srv/data/test2
## ```
##
## @par Configuration
##
## Some parameters can be configured in the script or by setting
## environment variables:
##
## - `SVC_NAME`: @n used as service name in logger
## - `NICENESS`: @n process niceness (19 lowest, 0 neutral, -20 highest priority)
##
## @par Permanent Mount in OpenWRT
##
## Example of how to add a permanent mount for the backup disk in OpenWRT
## with the `uci` command:
##
## Show partition info, especially the UUID. Helps to identify new USB drives easily.
##
## ```bash
## block detect
## ```
##
## Show the current mount configuration
##
## ```bash
## uci show fstab
## ```
##
## Add a new mount and enable it
## ```bash
## uci add fstab mount
## uci set fstab.@mount[-1].target='/mnt/backup'
## uci set fstab.@mount[-1].uuid='f1036013-33OO-4d7b-b3e8-c0c3f9a8NNNN'
## uci set fstab.@mount[-1].enabled='1'
## uci set fstab.@global[0].anon_mount='1'
## ```
##
## Review changes:
## ```bash
## uci changes
## ```
##
## Save changes
## ```bash
## uci commit
## ```
##
## Activate the mount
## ```bash
## /etc/init.d/fstab boot
## ```
##
## @see https://github.com/Anvil/bash-doxygen
## @see https://earlruby.org/2013/05/creating-differential-backups-with-hard-links-and-rsync/
##

# configuration ################################################################

## @brief this is the name used in the logger
## @details can be overridden by an environment variable of the same name
declare -r SVC_NAME="${SVC_NAME:-MachineTime}"
## @brief nice value of i/o jobs
## @details
## - 19 lowest priority
## - 0 normal
## - -20 highest priority
declare -r NICENESS="${NICENESS:-10}"

# globals ######################################################################

# absolute path to the directory of the running script
declare -r script_dir="$(readlink -f "$(dirname "$0")")"

## @brief the current process id
## @private
declare PROCID=$$

## @brief total of all copied bytes of all sub directories
## @private
declare STATS_COPY_SUM=0

## @brief total of all deleted bytes of all sub directories
## @private
declare STATS_DELETE_SUM=0

## @brief the current timestamp, will be used in base directory
## @private
declare -r START_DT=$(date +%Y%m%d-%H%M%S)

# setup locations
## @brief timestamp of the last backup
## @private
declare LAST_DT=""

## @brief folder name of the last backup
## @private
declare LAST_BASE=""

## @brief full backup or incremental? (default: incremental)
## @details
## use `-f` on the command line for full backup
## @private
declare FULL_BACKUP=0

## @brief path to global log file
## @private
declare global_logfile=""

## @brief base directory of backups
## @private
declare BACKUP_BASE=""

## @brief space separated list of source directories, no files allowed
## @private
declare SRC_DIRS=""

# functions ####################################################################

## @fn log()
## @brief log string to console
## @param message String message to print
## @return String formatted log message
function log() {
    local ts
    ts=$(date +"%b %d %H:%M:%S")
    echo "${ts} ${USER} ${SVC_NAME}[${PROCID}]: ${1}"
}

## @fn fail()
## @brief display error message and exit
## @param message String Error message
## @param exit_code int exit code (default: 1)
function fail() {
    local msg="$1"
    log "$msg"
    exit "${2:-1}"
}

#if ! which realpath; then # not in path, declare substitute
#log "declaring function realpath()"
## @fn realpath()
## @brief realpath substitute using readlink
## @param Path relative path to resolve
## @retval 0 on success
## @retval 1 if the path cannot be resolved
## @return Path absolute path or empty string if the file does not exist
function realpath() {
    local file="$1"
    local resolved
    # assign separately: `local var=$(cmd)` would return the status of `local`
    resolved=$(readlink -f "$file")
    local ret=$?
    echo "${resolved}"
    return $ret
}
#fi

## @fn iniget()
## @brief Get values from a .ini file
## @details
##
## Return values from an ini file. The special parameter `--list` returns
## a newline `\n` separated list of sections.
##
## If a section contains multiple keys with the same name, a newline `\n`
## separated list of values is returned.
##
## @par usage
## usage syntax:
##
## iniget <file> [--list|<section> [key]]
##
## @par examples
##
## file.ini:
##
## [Machine1]
## app = version1
##
## [Machine2]
## app = version1
## app = version2
##
## [Machine3]
## app=version1
## app = version3
##
## Examples:
## Get a list of sections
##
## $ iniget file.ini --list
## Machine1
## Machine2
## Machine3
##
## get a list of `key=values` in `section`:
##
## $ iniget file.ini Machine3
## app=version1
## app=version3
##
## get a list of values (one result)
##
## $ iniget file.ini Machine1 app
## version1
##
## get a list of values (two results)
##
## $ iniget file.ini Machine2 app
## version1
## version2
##
## loop over results
##
## for v in $(iniget file.ini Machine2 app); do
## echo "val: $v";
## done
##
## @param file path to `.ini` file
## @param section or `--list` (returns a list of sections)
## @param key key name to search for
## @return String list of sections when used with `--list` else values of `section`/`key` combination
function iniget() {
    if [[ $# -lt 2 || ! -f $1 ]]; then
        echo "usage: iniget <file> [--list|<section> [key]]"
        return 1
    fi
    local inifile=$1

    if [ "$2" == "--list" ]; then
        for section in $(grep "^\\s*\[" "$inifile" | sed -e "s#\[##g" | sed -e "s#\]##g"); do
            echo "$section"
        done
        return 0
    fi

    local section=$2
    local key
    [ $# -eq 3 ] && key=$3

    # This awk line turns ini sections => [section-name]key=value
    local lines
    lines=$(awk '/\[/{prefix=$0; next} $1{print prefix $0}' "$inifile")
    lines=$(echo "$lines" | sed -e 's/[[:blank:]]*=[[:blank:]]*/=/g')
    while read -r line ; do
        if [[ "$line" = \[$section\]* ]]; then
            local keyval
            keyval=$(echo "$line" | sed -e "s/^\[$section\]//")
            if [[ -z "$key" ]]; then
                echo "$keyval"
            else
                if [[ "$keyval" = "$key"=* ]]; then
                    echo "${keyval#"$key"=}"
                fi
            fi
        fi
    done <<<"$lines"
}

## @fn cleanup()
## @brief remove temp files, etc. run before shutdown
function cleanup() {
    rm -rf "/tmp/${PROCID}.log" >/dev/null 2>&1 || true
    log "== Backup finished $(date)"
    log "Copied/Deleted: $STATS_COPY_SUM/$STATS_DELETE_SUM bytes"
}

## @fn stats_copy()
## @brief generate statistics of copied and deleted files
## @param dst Path of destination folder
## @param logfile Path to log file
## @return Integer number of bytes
function stats_copy() {
    local dst="$1"
    local logfile="$2"
    local sum_copy_bytes=0 n

    # lines look like "file: <size> <path>"; paths ending in "/" are directories
    for n in $(awk -F' ' '/^file: .*[^\/]$/ {print $2}' "$logfile"); do
        sum_copy_bytes=$((sum_copy_bytes + n))
    done
    echo $sum_copy_bytes
}

## @fn stats_delete()
## @brief generate statistics of deleted files in incremental backup
## @param dst Path of destination folder
## @param last Path of reference folder
## @param logfile Path to log file
## @return Integer number of bytes
function stats_delete() {
    local dst="$1"
    local last="$2"
    local logfile="$3"
    local sum_delete_bytes=0 f size

    # rsync reports deletions as "deleting <relative path>";
    # read whole lines so file names with spaces are handled
    while IFS= read -r f; do
        # skip directories, their contents are listed separately
        [ -f "${last}${f}" ] || continue
        size=$(stat -c "%s" "${last}${f}")
        sum_delete_bytes=$((sum_delete_bytes + size))
    done < <(sed -n 's/^deleting //p' "$logfile")
    #log "Deleted ${sum_delete_bytes} bytes from $last"
    echo $sum_delete_bytes
}

## @fn backup_full()
## @brief create a full backup
## @param dir Path source directory to backup from
## @param dst Path base destination directory
## @return Integer exit code of `rsync`, exits if `dst` cannot be created
function backup_full() {
    local dir="$1"; shift
    local dst="$1"; shift
    log "${dir} → ${dst}"

    mkdir -p "${dst}${dir}" || fail "Failed to create destination directory" 3
    nice -n "$NICENESS" rsync --out-format="file: %l ${dst}${dir}%n%L" -a \
        "$dir" "${dst}${dir}" | tee -a "${global_logfile}" > "/tmp/${PROCID}.log"
    # $? would be the status of tee; PIPESTATUS[0] is the one of rsync
    local ret=${PIPESTATUS[0]}

    # statistics
    local copy_bytes
    copy_bytes=$(stats_copy "${dst}" "/tmp/${PROCID}.log")
    STATS_COPY_SUM=$((STATS_COPY_SUM + copy_bytes))
    log "» Copied/Deleted: $copy_bytes/0 bytes"

    [ "$ret" != "0" ] && log "Error during full backup of ${dir}"
    return "$ret"
}

## @fn backup_incremental()
## @brief create an incremental backup
## @param dir Path source directory to backup from
## @param dst Path base destination directory
## @param last Path base destination directory of the last backup
## @return Integer exit code of `rsync`, exits if `dst` cannot be created
function backup_incremental() {
    local dir="$1"; shift
    local dst="$1"; shift
    local last="$1"; shift
    log "${dir} → ${dst} Δ ${last}"
    #log "dir: $dir"
    #log "dst: $dst"
    #log "last: $last"

    mkdir -p "$(dirname "${dst}${dir}")" || \
        fail "Failed to create destination directory" 3
    # “cp -al” makes a hard link copy
    nice -n "$NICENESS" cp -al "${last}${dir}" "${dst}${dir}"
    nice -n "$NICENESS" rsync --out-format="file: %l ${dst}${dir}%n%L" \
        -a --delete "${dir}" "${dst}${dir}" | \
        tee -a "${global_logfile}" > "/tmp/${PROCID}.log"
    # $? would be the status of tee; PIPESTATUS[0] is the one of rsync
    local ret=${PIPESTATUS[0]}

    # statistics
    local copy_bytes del_bytes
    copy_bytes=$(stats_copy "${dst}" "/tmp/${PROCID}.log")
    del_bytes=$(stats_delete "${dst}" "${last}${dir}" "/tmp/${PROCID}.log")
    STATS_COPY_SUM=$((STATS_COPY_SUM + copy_bytes))
    STATS_DELETE_SUM=$((STATS_DELETE_SUM + del_bytes))
    log "» Copied/Deleted: $copy_bytes/$del_bytes bytes"

    [ "$ret" != "0" ] && log "Error during incremental backup of ${dir}"
    return "$ret"
}

## @fn usage()
## @brief show usage
## @param Path script relative or absolute
function usage() {
    local name
    name=$(basename "$1")
    echo ""
    cat <<EOT
usage: ${name} [-f] <backup_base> <src_dir_1> [<src_dir_2> [<src_dir_3> [...]]]
       ${name} [-f] -c <config.ini>

Parameters:
  - -f (optional): Full backup, otherwise incremental
  - -c <config.ini>: config file
  - backup_base: base directory of backups
  - src_dir_N: folder to back up

Example <config.ini>:

  [main]
  backup_root = /srv/data/bkp
  src_dir = /srv/data/test
  src_dir = /srv/data/test2

EOT
    echo ""
}

## @fn log_parameters()
## @brief log all parameters to backup directory
function log_parameters() {
    # write parameter file
    cat <<-EOT > "${BASE_DIR}/parameters.ini"
[main]
backup_root = $BACKUP_BASE
src_dir = $SRC_DIRS
full_backup = $FULL_BACKUP
config_file = $config_file

[variables]
SVC_NAME = $SVC_NAME
NICENESS = $NICENESS
script_dir = $script_dir
STATS_COPY_SUM = $STATS_COPY_SUM
STATS_DELETE_SUM = $STATS_DELETE_SUM
START_DT = $START_DT
PROCID = $PROCID
LAST_DT = $LAST_DT
LAST_BASE = $LAST_BASE
global_logfile = $global_logfile

EOT
}

# Main #########################################################################
if [ -z "$1" ] || [ "$1" = "-h" ] || [ "$1" = "--help" ]; then
    usage "$0"
    exit
fi

log "== Starting backup @ $(date)"

# check if we need to do a full backup or incremental
[ "$1" == "-f" ] && FULL_BACKUP=1 && shift

# check if we need to read a config file or if config is provided as parameters
if [ "$1" == "-c" ]; then
    config_file="$2"; shift; shift
    [ ! -f "$config_file" ] && \
        fail "Config file '$config_file' is not readable" 4

    BACKUP_BASE=$(iniget "$config_file" main backup_root)
    SRC_DIRS=$(iniget "$config_file" main src_dir | tr '\n' ' ')
else # read config from command line
    BACKUP_BASE="$(realpath "$1")"; shift
    for d in "$@"; do SRC_DIRS="${SRC_DIRS} $(realpath "$d")"; done
fi

# trim SRC_DIRS variable
SRC_DIRS=$(echo "$SRC_DIRS" | sed -e 's,^\s*,,; s,\s*$,,')
log "Backup base: ${BACKUP_BASE}"
log "Source directories: ${SRC_DIRS}"

## FIXME: add input parameter checks
if [ -z "$SRC_DIRS" ] || [ -z "$BACKUP_BASE" ]; then
    usage "$0"
    exit 1
fi

## @brief destination folder for the new backup
## @private
declare -r BASE_DIR="${BACKUP_BASE}/${START_DT}"
global_logfile="${BASE_DIR}/${START_DT}-backup.log"

# for incremental backups, we need to find a reference backup
if [ "$FULL_BACKUP" != "1" ]; then
    # reference locations for incremental backup; only folders named like
    # START_DT are considered, and reverse sorting puts the newest first
    LAST_DT=$(ls -1 "$BACKUP_BASE" 2>/dev/null | grep -E '^[0-9]{8}-[0-9]{6}$' | sort -r | head -n1)
    LAST_BASE="${BACKUP_BASE}/${LAST_DT}"
    # if we do not find at least one old folder, we
    # cannot create an incremental backup, so abort
    [ -z "$LAST_DT" ] && fail "Existing folder required for incremental backup" 2
    log "Running incremental backup Δ ${LAST_BASE}"
else
    log "Running full backup"
fi

mkdir -p "$BASE_DIR" || fail "Failed to create destination directory" 3
log_parameters

# loop over all source folders and include them in the backup
for d in $SRC_DIRS; do
    # make sure we have trailing slash for rsync
    [[ "$d" == */ ]] || d="$d/"
    echo "== Base dir: $d" >> "/tmp/${PROCID}.log"

    # do a full backup
    if [ "$FULL_BACKUP" == "1" ]; then
        backup_full "$d" "$BASE_DIR" # "$@"
    else
        # if the sub folder does not exist in the last backup, do a full backup
        if [ ! -d "${LAST_BASE}/$d" ]; then
            backup_full "$d" "$BASE_DIR" # "$@"
        else # if the sub folder in last backup exists, do an incremental backup
            backup_incremental "$d" "${BASE_DIR}" "${LAST_BASE}" # "${@}"
        fi
    fi
done

## update parameters after successful run
log_parameters

# cleanup temp files
cleanup

exit 0;
```
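One bash detail matters when adapting the script: rsync's output is piped through tee, and after a pipeline `$?` holds the exit status of the last command (tee), not of rsync. Bash records the status of every stage in the `PIPESTATUS` array, which has to be read right after the pipeline. A tiny demo, with `false` standing in for a failing rsync:

```bash
#!/usr/bin/env bash
# $? after a pipeline reports only the LAST command (here: tee) ...
false | tee /dev/null > /dev/null
last_status=$?

# ... PIPESTATUS keeps one status per stage; copy it before running
# anything else, because the next command overwrites it
false | tee /dev/null > /dev/null
stages=("${PIPESTATUS[@]}")

echo "last: $last_status, stages: ${stages[*]}"   # last: 0, stages: 1 0
```

For the backup functions this means taking rsync's status from `${PIPESTATUS[0]}` immediately after the pipeline; alternatively, `set -o pipefail` makes `$?` non-zero whenever any stage fails.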